Functional programming concepts such as the State monad and the Traversable type class can be powerful tools for thinking about common software engineering problems. As with any abstractions, these ideas may seem unfamiliar or opaque in the absence of concrete examples. The goal of this post is therefore to motivate and contextualize a “stateful traversal” design pattern by working through the specific problem of generating synthetic data points drawn from a simple statistical model.

AR(p) modeling

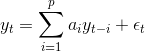

The p-th order linear autoregressive model AR(p) assumes that data point $$y_t$$ is generated according to the equation

where the $$a_i$$ are the fixed autoregressive coefficients and $$\epsilon_t$$ are the iid Gaussian noise terms. This is a basic and foundational model in econometrics and time series analysis.

Data generation

Say we have some model estimation code which we would like to sanity test against synthetic data points generated by a known model. First, we note that the $$\epsilon$$ terms are, by definition, totally independent of anything else. We can therefore simply sample $$N$$ of these to populate an $$\epsilon$$ vector.

The next step is a bit more involved. While we could compute each $$y_t$$ independently using only the previous noise terms $$\epsilon$$, this calculation would be $$O(N^2)$$ and a bit awkward. A more intuitive approach might be to sweep a pointer over the $$\epsilon$$ array and our (to-be-populated) $$y$$ array, using pointer/index arithmetic to select the appropriate previous terms for our calculation of each $$y_t$$.

This simple diagram shows how we can slide a function $$f$$ with fixed coefficients a over our previously generated $$y$$ and iid $$\epsilon$$.

An unfortunate aspect of this design is that it is mixes inputs and outputs in a way that could easily lead to programming errors. Also, the pointer arithmetic trick couples us to this exact problem and somewhat obscures what we’re really doing: threading the dynamic evolution of some state (here, the trailing window) through the course of our data-generating process. To make the latter point more concrete, consider the bookkeeping complexity that would be entailed by the extension of this approach to models with a richer notion of state, such as an ARIMA model or an Infinite Hidden Markov Model.

This motivates an alternative implementation which explicitly models the evolution of some state as we generate our data.

State-threading approach

The idea here is that the AR(p) equation above tells us how to get a mapping from some state of type S (window $$[y_{t-p},\ldots,y_{t-1}]$$) and an input type I (noise $$\epsilon_t$$) to a new output of type O (output $$y_t$$) and a new state of type S (new window $$[y_{t-(p-1)},\ldots,y_t]$$). As a Scala type signature this would look something like (S,I) => (S,O).

The above diagram shows the simplest version of this idea, where $$g$$ is nearly identical to $$f$$, except with an additional output for the new state S.

We can simplify this a bit via the concept of partial application. We note that $$ a $$ is fixed across all evaluations of our function, so we can fix that parameter to get $$g(y,\epsilon_t)$$, as shown in this diagram.

Finally, we combine partial application with the functor property of our $$\epsilon$$ sequence by mapping partial application of our function over $$\epsilon$$ to get separate functions $$g_t(y)$$ for each individual position $$t$$. Our function now simply maps from a previous window S to a new window S and an output value O, as shown in this diagram

This re-arrangement has stripped our sequential data-generating computation down to its essence: given a sequence of iid noise terms $$\epsilon$$, we almost directly encode our mathematical definition as a function which takes a sliding window state S as its input, and returns a computed value O and a new sliding window state S as output, therefore having type signature S => (S,O).

Plug-and-play

All that remains is to construct the plumbing necessary to put it all together. As luck would have it, there is a common functional programming idiom for traversing a data structure while simultaneously accumulating state and transforming individual elements based on that state. This allows us to simply supply our transformed function of $$g$$ along with an initial window state (say, all zeros).

This is somewhat remarkable. The AR(p) equation tells us how to go from a state (the window of previous $$y_t$$) and an input ($$\epsilon_t$$) to a new state (the new window) and an output (the new $$y_t$$), and we can directly plug exactly this information into some generic machinery to achieve our desired result. So how does it work?

State monad

Clearly we’ve buried some crucial mechanics – let’s take a look at StatefulGeneration.generate():

The context bounds assert the availability of utility type class instances for our container type G[_] (here, List), which are supplied by scalaz.std.list.listInstance and retrieved via the implicitly statements.

The first piece of machinery invoked in the above example is functor.map(gs)(State.apply). Recall that gs has type List[Window => (Window,Double)], that is, a sequence of functions that each map an input state to a new output state along with an output value. This simple and abstract definition of a stateful computation occupies a special place in the functional programming world, known as the State Monad.

There exists a vast amount of instructional content on this topic which we shall not recapitulate here (you can find plenty on the web, for a nice example see “Learn You A Haskell” aka LYAH). Suffice it to say that for our purposes a State instance is a wrapper for a function that takes a state of type S as input, and returns some new state of type S along with an “output” value of type A, something like:

The “Monad” half of the name means that there exists a flatMap function over State which, loosely speaking, “chains” two of these computations, using the output S of one State as the input to the next and returning a new stateful computation. An example implementation and simple diagram are below, where State.run takes an initial state S as input and then executes the wrapped function:

Returning to our generate() function above, the snippet simply wraps each of our $$g_t$$ functions Window => (Window,Double) into a scalaz State, for which flatMap and a variety of other nice helper functions are already defined.

Traverse/Sequence

What about traverse.sequenceS[Window,Double]? Let’s inspect the type signature of sequenceS, substituting in the actual concrete types we’re using:

Verbally, this translates to transforming a List of stateful computations with Window state and Double output into a single stateful computation with Window state and List[Double] output.

Informally, sequenceS is able to accomplish this by using the flatMap machinery defined in the previous section to chain all of the individual stateful computations into one. Furthermore, sequenceS also transforms the “output” variable of the resulting stateful computation from Double to List[Double], a list of all the individual outputs, which is exactly the final output we originally wanted. In general, Sequence allows us to “commute” two higher-order types, going from G[F[A]] to F[G[A]]. In this specific case we are transforming List[State[Window,Double]] to State[Window, List[Double]].

The End

Finally, we run it by supplying an all-zeros window as the initial state. The resulting value is of type (Window,List[Double]), containing both the final window state and the list of all output values. We retrieve the latter via ._2 and declare victory!

What have we gained by all this? First, we have pushed the work of incidental wiring into well-defined library functions, leaving our custom code focused solely on the particulars of our problem: the $$g()$$ function which emits a value and updates the state. This compact surface area for custom logic should be easier to both understand and test. Second, notice that StatefulGeneration.generate() is polymorphic in the type of the data container G[_], subject to the availability of Traverse and Functor type class instances. Finally, the stateful traversal and transformation of sequential data structures is ubiquitous in software, making this design pattern a valuable addition to one’s “vocabulary” for understanding and reasoning about code.

References

- This example was briefly discussed in my talk “Economical machine learning

via functional programming” (slides, video) at the Big Data Scala by the Bay conference in Oakland. - The code examples use the scalaz library, for which the “Learning Scalaz” blog series is an invaluable companion.

- The functional programming concepts discussed here are heavily influenced by the excellent book Functional Programming in Scala.

- My reference for the AR(p) process is Applied Econometric Time Series.

- A more thorough look at some of these ideas can be found in Eric Torreborre’s epic blog post on the “Essence of the Iterator Pattern” paper by Jeremy Gibbons and Bruno C. d. S. Oliveira. Also see other blog discussion here, here, and here.

Any mistakes are of course my own, and I’d love to hear about them!